Convergence and Compromise


3rd August 2025

1. Introduction

The previous essay argued for “no easy eutopia”: that, without serious, coordinated efforts to promote the overall best outcomes, only a narrow range of likely futures would capture most achievable value. A naive inference from no easy eutopia would be that mostly-great futures are therefore very unlikely, and that the expected value of the future is barely above 0.

That inference would be mistaken. Very few ways of shaping metal amount to a heavier-than-air flying machine, but powered flight is ubiquitous, because human designers homed in on the design target. Similarly, among all the possible genome sequences of a certain size, a tiny fraction codes for organisms with functional wings. But flight evolved in animals, more than once, because of natural selection. Likewise, people in the future might home in on a mostly-great future, even if that’s a narrow target.

In the last essay, we considered an analogy where trying to reach a mostly-great future is like an expedition to sail to an uninhabited island. We noted the expedition is more likely to reach the island to the extent that:

- The island is bigger, more visible, and closer to the point of departure;

- The ship’s navigation systems work well, and are aimed toward the island;

- The ship’s crew can send out smaller reconnaissance boats, and not everyone onboard the ship needs to reach the island for the expedition to succeed.

The previous essay considered the first of these conditions, and argued that the island is small and far away. This essay considers the second and third: whether future humanity will deliberately and successfully home in on a mostly-great future. Mapping onto those two conditions, we consider two ways in which that might happen:

- First, if there is widespread and sufficiently accurate ethical convergence, where those people who converge on the right moral view are also motivated to promote what’s good overall. We discuss this in section 2.

- Second, if there’s partial ethical convergence, and/or partial motivation to promote what’s good overall, and some kind of trade or compromise. We think this is the most likely way in which we reach a mostly-great future if no easy eutopia is true, but only under the right conditions. We discuss this in section 3.

In section 4, we consider the possibility that, even if no one converges on a sufficiently accurate ethical view, and/or no one is motivated to promote what’s good overall, we’ll still reach a mostly-great future. In the “sailing” analogy, this would be like expecting the ship to reach the island even though none of its crew ultimately cares about getting there. We argue this is unlikely, if no easy eutopia is true. In section 5, we consider which scenarios are “higher-stakes”, and so should loom larger in decision-making under uncertainty. In section 6, we conclude.

The considerations canvassed in this essay have led to significant updates in our views. For example, in What We Owe The Future, Will said he thought that the expected value of the future, given survival, was less than 1% of what it might be.1 After being exposed to some of the arguments in this essay, he revised his estimate closer to 10%; after analysing them in more depth, that figure dropped a little, to 5%–10%. We think that these considerations provide good arguments against extreme views on which Flourishing is close to 0, but we still think that Flourishing has notably greater scale than Surviving.

2. Will most people aim at the good?

In this section, we’ll first consider whether, under reasonably good conditions,2 most people with power would converge on an accurate understanding of what makes the future good, and would be significantly motivated to pursue the good.3 We’ll call this widespread, accurate, and motivational convergence, or “WAM-convergence”. Second, we’ll consider how likely it is that we will in fact reach those “reasonably good” conditions. (We’ll here assume that, given WAM-convergence, we will reach a mostly-great future.)

If this idea is right, then even a global dictatorship could have a good chance of bringing about a mostly-great future: under the right conditions, the dictator would figure out what makes the future truly valuable, become motivated to bring about the most valuable futures, and mostly succeed. From feedback on earlier drafts, we’ve found that a surprising number of readers had this view.

In this essay we talk about the idea of promoting the good “de dicto”. This is philosophy jargon which roughly means “of what is said”. It’s contrasted with “de re”, roughly meaning “of the thing itself”. To illustrate, there are two ways in which Alice might want to do what’s best. First, she might want to do some particular act, and happen to believe that act is what’s best, but she wouldn’t change her behaviour if she learned that something else was best. She may be motivated to help the poor, or fight racism, or support a friend, but not, in addition, to do what’s best, or to contemplate what that may be. If so, then Alice is motivated to do good de re. Second, Alice might want to do some particular act and believe that act is what’s best, but if she learned that something else was best she would change her behaviour. She’s motivated, at least in part, by doing what’s best, whatever that consists in.4 This attitude could also motivate Alice to try to figure out what’s best, with a view to potentially changing the particular things she acts on and cares about.5 When we talk about WAM-convergence, we’re talking about convergence to motivation to promote the good de dicto.

The remainder of section 2 discusses whether WAM-convergence is likely. In section 2.2, we’ll consider and ultimately reject two arguments, based on current moral agreement and moral progress to date, for optimism about WAM-convergence. In section 2.3, we describe three important aspects of a post-AGI world and discuss their upshots. In section 2.4, we give our main argument against expecting WAM-convergence even under reasonably good conditions.

The term “reasonably good conditions” is vague. What we mean is that there are no major blockers to actually producing a mostly-great future, provided that most people are motivated to produce one. For example, one type of major blocker would be some early lock-in event that makes it impossible for later generations to produce a mostly-great future. We discuss major blockers in section 2.5.

2.2. Moral agreement and progress to date

In this section, we discuss two arguments for optimism about WAM-convergence, based on our current and historical situation. We explain why we’re unconvinced by both.

2.2.1. Current agreement

In the world today, people with very different worldviews nonetheless widely agree on the value of bringing about goods like health, wealth, autonomy, and so on. What’s more, much of the apparent moral disagreement we see is a result of empirical disagreement, rather than fundamental moral disagreement6 — for example, two people might disagree over whether euthanasia should be legalised, but only because they disagree about the likely societal consequences. Because of this, it might seem like, really, humans all broadly share the same values, such that we are already close to WAM-convergence.

However, we think these considerations give reason to expect only very limited kinds of convergence in the future. First, goods like health, wealth and autonomy are instrumentally valuable for a wide variety of goals. For example, hedonistic and preference-satisfaction theories of wellbeing normally agree that good physical health is typically good for a person, even though neither view regards good physical health as intrinsically good.

The problem is that this agreement will likely break down in the future, as we max out on instrumentally valuable goods and turn instead to providing intrinsically valuable goods, and as technological advancement increasingly allows us to optimise towards very specific goals, including very specific types of wellbeing.

A life that’s increasingly optimised for maximal hedonic experience will likely begin to look very different from a life that’s increasingly optimised for preference-satisfaction. By each view’s lights, the life it favours will pull further and further ahead of the life the other view favours, and that other life might even begin to get worse. For example, perhaps the life which is increasingly optimised for hedonism begins to resemble some kind of undifferentiated state of bliss, with fewer and fewer meaningful preferences at all. With limited optimisation power, both views mostly agree on the same “low-hanging fruit” improvements, like better physical health. But with more optimisation power, the changes these views want to see in the world become increasingly different.

A second reason why current moral agreement doesn’t provide strong support for WAM-convergence is that people today have strong instrumental reasons to conform, in their moral behaviour and stated beliefs, to the rest of society.7 If you express or act on highly unusual moral views, you risk social opprobrium, and make cooperation or trade with others more difficult. Similarly, if one group expresses very different moral views from another, ideologically-motivated coercion or conflict can become more likely. What’s more, pressures to outwardly conform often influence actual prevailing beliefs, at least eventually. To give one illustration: polygamy was a religiously endorsed and defining feature of the Mormon Church for much of the 19th century, until the practice came under intense pressure from the US federal government. In 1890, the Church’s president at the time, Wilford Woodruff, conveniently experienced a divine revelation that the Church should prohibit plural marriage, which it did. Now, most Mormons disapprove of polygamy. Other examples include: many countries abolishing slave trading and ownership as a result of pressure from Britain; the long history of religious conversion by conquest; the embrace of democratic values by Germany and Japan (and then most of the rest of the world) after WWII;8 and many more.

A third issue is that people have so far done very little moral reflection. If two people start at the same spot and each walks 10 metres in any direction, they must still be close to each other. But that gives little reason to think that they’ll remain close if they keep walking for 100 miles: even slight differences in their orientation would lead them to end up very far apart.9

Visualising moral reflection as an at least partially random walk in a space of views. If all views begin very close together, then further reflection seems likely to make resulting views diverge more, on average.

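The geometry behind the walking analogy can be made concrete. Below is a minimal Python sketch, offered purely as an illustration: it assumes straight-line walkers for the heading-difference case and, for the caption’s random-walk picture, unit-length steps in uniformly random directions in two dimensions. Neither model, nor the helper names `separation` and `mean_random_walk_gap`, comes from the essay; they are hypothetical stand-ins for “moral reflection as movement through a space of views”.

```python
import math
import random


def separation(distance: float, angle_deg: float) -> float:
    """Straight-line gap between two walkers who leave the same spot and walk
    `distance` metres in straight lines whose headings differ by `angle_deg`."""
    # Start point and the two end points form an isosceles triangle,
    # so the gap is the chord 2 * d * sin(theta / 2).
    return 2.0 * distance * math.sin(math.radians(angle_deg) / 2.0)


def mean_random_walk_gap(steps: int, trials: int = 500, seed: int = 0) -> float:
    """Average distance between two independent 2-D random walkers, both
    starting at the origin and taking `steps` unit steps in uniformly random
    directions (a hypothetical model of undirected reflection)."""
    rng = random.Random(seed)  # seeded so results are reproducible
    total = 0.0
    for _ in range(trials):
        dx = dy = 0.0  # position of walker A minus position of walker B
        for _ in range(steps):
            a = rng.uniform(0.0, 2.0 * math.pi)
            b = rng.uniform(0.0, 2.0 * math.pi)
            dx += math.cos(a) - math.cos(b)
            dy += math.sin(a) - math.sin(b)
        total += math.hypot(dx, dy)
    return total / trials


print(f"1 degree apart after 10 m:   {separation(10, 1):.2f} m")
print(f"1 degree apart after 100 km: {separation(100_000, 1):.0f} m")
print(f"random walks, 10 steps:   mean gap {mean_random_walk_gap(10):.1f}")
print(f"random walks, 1000 steps: mean gap {mean_random_walk_gap(1000):.1f}")
```

For example, `separation(10, 1)` is about 0.17 m, while `separation(100_000, 1)` is over 1.7 km: the same one-degree difference in heading matters far more on a long walk. In the random-walk model, the expected gap grows roughly with the square root of the number of steps, so it keeps widening as reflection continues.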

With advanced technology, this issue will get even more extreme, because people will be able to change their nature quite dramatically. Some people might choose to remain basically human, others might choose to remain biological but enhance themselves into “post-humans”, and others might choose to upload and then self-modify into one of a million different forms. They might also choose to rely on different types of superintelligent AI advisors, trained in different ways, and with different personalities. The scope for divergence between people who originally started off very similar to each other therefore becomes enormous.

Finally, even if there is a lot of agreement, that doesn't mean there will be full agreement. Imagine that two views which disagree about what’s ultimately valuable each make a list of improvements to the world, ordered by priority: “First we solve the low-hanging fruit of easily-curable diseases. Then we eliminate extreme poverty. Then…” The two views might agree on the items nearest the top of their lists. But eventually (and this point could be very far from the world today) they could simply run out of points of agreement. This would be like reaching a fork in the road: one path leads to a near-best future on the first view but seals off a near-best future on the second, and vice versa. And if a mostly-great future is a narrow target, then getting lots of agreement isn’t enough; the remaining disagreements would likely be enough to ensure that each view’s eutopia loses most of the value by the other view’s lights.

And there currently isn’t anything close to full agreement on moral matters. If we look back at the list of potential future moral catastrophes from the last essay (“No Easy Eutopia”), there’s clearly little active agreement about what to do about the relevant issues, often because many people just don’t have views about them. But we’ll need to converge on most or all of those issues in order for people to agree on what a mostly-great future looks like.

2.2.2. Moral progress

A second reason you might think that WAM-convergence is likely is moral progress to date. At least on many issues, like slavery, civil rights, democracy, attitudes to women and sexual ethics, the world’s prevailing moral views have improved over the last few hundred years. Different moral views also seem to be broadly converging in the direction of liberal democratic values. According to the World Values Survey,10 as societies industrialise and then move into a post-industrial, knowledge-based phase, there's a general shift from “traditional” to “secular-rational” values and from “survival” to “self-expression” values. The world has converged so far on the importance of democracy that most non-democracies pretend to be democratic, a practice that would have looked bizarre even 200 years ago.

Perhaps, then, there is some underlying driver of this moral progress such that we should expect it to continue: for example, if moral progress occurred because people have gotten wiser and more educated over time, that might give us hope for further progress in the future, too. However, there are a number of reasons why this is at best only a weak argument for expecting WAM-convergence.11

First, even if world values seem to be converging, that doesn’t mean they’re converging towards the right views. We are the product of whatever processes led to modern Western values, and so it’s little surprise that we think modern Western values are better than historical values. Our personal values are very significantly influenced by prevailing modern values, and it’s trivially true that values have historically trended toward modern values!12,13

There are various possible explanations for the moral convergence we’ve seen. One is that there’s been one big wave of change towards liberal values, but that this is a relatively contingent phenomenon. History could easily have gone differently, and there would have been some other big wave instead: if China had had the industrial revolution, modern values might be less individualistic; if Germany had won WWII, or the USSR had won the Cold War, then modern values might be much more authoritarian. A second explanation is that modern values are the product of our technological and environmental situation: that a post-industrial society favours liberal and egalitarian moral views, perhaps because societies with such views tend to do better economically. But, if so, we shouldn’t expect those trends to continue into a post-AGI world, which is very unlike our current world and where, for example, human labour no longer has economic value. A third explanation is that globalisation has created greater incentives for moral conformity, in order to reduce the risk of war and make international trade easier. On none of these explanations does what we regard as past moral progress give us reason to expect continued moral progress into the future.

Second, it’s easy to overrate the true extent of moral progress on a given moral view. The moral shortcomings of the world today are not necessarily as salient as the successes, and for many issues it’s socially taboo to point out areas of moral regress, precisely because doing so means pointing to views that are currently socially unacceptable or at least morally controversial. It’s therefore easy to overlook the ways in which the world has regressed, morally, by one’s lights.

To see this, consider how total utilitarianism might evaluate humanity today. Social norms around family size have changed dramatically over the last two hundred years, and families are now much smaller than they used to be. From a total utilitarian perspective, this has arguably resulted in an enormous loss of wellbeing to date. Suppose, for example, that the US total fertility rate had never dropped below 4 (in our actual history, it did so in the late 19th century). The current US population would be over a billion people, meaning more people to enjoy life in an affluent democracy, and also more people benefiting the rest of the world through (for example) the positive externalities of scientific research.

There are other examples one could point to, from a utilitarian perspective. Highly safety-cautious attitudes to new technology have limited the development and uptake of socially beneficial innovations, such as nuclear power and new drugs and medicines. The rise in consumerism means that people spend relatively more on goods that don’t really improve their quality of life. The huge rise in incarceration inflicts enormous human suffering. Globalism has been on the decline in recent decades, impoverishing the world overall. Rates of meat consumption have vastly increased, and the conditions in which we treat animals have worsened: factory farming did not exist in 1900; by 2025, over 70 billion land animals each year were being slaughtered in factory farms.

All these cases are disputable, even on the assumption of utilitarianism. But at the very least they complicate the picture of a steady march of progress, from a utilitarian point of view.14 And a similar case could be made on other moral views.

A third reason for caution about optimism based on past moral progress is that, even if there has been genuine moral progress, and even if it was driven by some mechanism that reliably delivers moral progress, there’s no guarantee that that mechanism will be enough to deliver all the moral progress we have left to make. For example: society made great strides away from wrongful discrimination, subjugation, and cruelty against groups of people because those groups advocated for themselves, through persuasion, protest, striking, and movement-building. But some wrongs don’t affect groups that can advocate for themselves. Every day, humans slaughter some 200 million chickens. If chickens feel pain and fear, as it seems they do, this seems like a moral catastrophe.15 But we can’t rely on chickens to organise, strike, or speak up for themselves.

Many of the most crucial moral questions we’ll have to answer to get to a near-best future will be questions where we cannot rely on groups to speak up for themselves. For example, it will become possible to design new kinds of beings, like digital minds. We could design them never to complain as they willingly engage in, and even genuinely enjoy, kinds of servitude which might nonetheless be bad.

Then there are questions around population ethics — questions about evaluating futures with different numbers of people. On many views in population ethics, it’s good to create new lives, as long as those lives are sufficiently happy. But people who don’t exist can’t complain about their nonexistence.

And there might be far weirder issues still, which require far more deliberate and concerted efforts to get right — like issues around acausal trade, cooperation in large worlds, or dealing with the simulation hypothesis.

2.3. Post-AGI reflection

We’re considering whether there will ultimately be WAM-convergence. But, given survival, the world will look very different from the present, especially after an intelligence explosion. In this section, we discuss three aspects of the post-AGI world that we think are important to bear in mind when thinking about the possibility of WAM-convergence. These aspects are sometimes used as arguments for optimism about convergence; we think they have some real force in this regard, but that force is limited.

2.3.1. Superintelligent reflection and advice

Access to superintelligence could radically increase the amount of reflection people can do. And this could be a cause for optimism — maybe moral disagreement persists largely because moral reasoning is just too hard for humans to do well and consistently. Here’s Nick Bostrom in Superintelligence:

One can speculate that the tardiness and wobbliness of humanity's progress on many of the “eternal problems” of philosophy are due to the unsuitability of the human cortex for philosophical work. On this view, our most celebrated philosophers are like dogs walking on their hind legs - just barely attaining the threshold level of performance required for engaging in the activity at all.

In the future, we could design superintelligent AI systems to be smarter, more principled, more open-minded, and less prone to human biases than the wisest moral thinkers today. Perhaps AI systems could finally settle those stubborn puzzles of ethics that seem so intractable: we could just ask them to figure out which futures are best, tell them to set a course for those futures, and then enjoy the ride to eutopia.

What’s more, as mentioned previously, much apparent moral disagreement might really bottom out in empirical disagreement. Other disagreements could arise from ‘transparent’ errors in reasoning from shared principles, in the sense that the error becomes clear to the person making it once it is pointed out. If enough current disagreement ultimately arises from empirical disagreements or transparent reasoning errors, then you could maintain that, as long as the future trends towards fewer empirical and conceptual mistakes, it wouldn’t be surprising if most people ended up converging on the same place. And since AI systems seem well-suited to helping clarify and resolve clear empirical and logical disagreements, we should expect future people to have much more accurate empirical beliefs, and to make far fewer transparent reasoning errors.

It does seem very likely that advanced AI will give society as a whole dramatically more ability to reflect than we have had to date. Sheer cognitive abundance means that each person will be able to run billions of personal reflection processes, each of which simulates scenarios where they were able to reflect for millions of years, from different starting points and with different ways of reflecting. And it seems very likely that AI will ultimately be able to do ethical reasoning better than any human can, and thereby introduce arguments and considerations that human beings might never have thought of.

But that doesn’t mean that people will all or mostly converge to the same place. First, even if different people do all want to act on their post-reflection views of the good (de dicto), the fact that future people will have superintelligent assistance says little about whether we should expect moral convergence upon reflection, from different people who have different ethical starting points and endorse different reflective processes. Different people’s reflective processes might well lead them in very different directions even if they make no empirical or conceptual mistakes. We’ll discuss this more in section 2.4.

Second, people might just be uninterested in acting on the results of an open-ended process of reflecting on the good (de dicto). They might be interested in acting only on the outcome of some constrained reflective process, one which assumes, and can’t diverge from, some preferred starting beliefs, like environmentalism, some specific religious views, or an overwhelming concern for the interests of biological humans (or of a particular group of humans). As an analogy: when today’s politicians select advisors, they choose advisors who broadly agree with their worldview, and task them with advising on how to execute that worldview, rather than on how their values could be fundamentally off-base.

Alternatively, people might just not be interested in acting in accordance with the result of any kind of ethical reflective process. Suppose that it’s your partner’s birthday and that you want to buy them a gift. You might strongly suspect that after countless years of earnest ethical reflection, you’d reach a different (and motivating) view about how to spend that money, perhaps because you’d become unable to rationally justify giving your partner’s interests any special importance over anyone else’s.16 But would you go along with what your reflective self recommends?

Third, of course, people might just remain self-interested. If two people want what’s best for them, they might be motivated by the result of reflecting on how to get more of what they want, but it needn’t matter if their AI advisors converge on the same conception of the good, because the good (de dicto) was never motivational for them.

We suspect the most common attitudes among people today would be to reject the idea of reflection on the good (de dicto) as confusing or senseless, to assume that one’s present views are unlikely to be moved by reflection, or to see one’s idealised reflective self as an undesirably alien creature. If people strongly dislike the suggestions of their more-reflective selves, then they probably won’t follow those suggestions. (This idea is discussed further in an essay by Joe Carlsmith.)17

The situation becomes a bit less clear if your reflective self has time to walk you through the arguments for why you should change your views. But, even then, we think people will often just not be moved by those arguments. The fact that some argument would change someone’s view if they were given millions of years to reflect (with much greater intellectual capacity, and so on) doesn’t make it likely that the argument would change their view in a short period of time, given their actual cognitive capacity, unless that person already had a strong preference to defer to their reflective self. Imagine, for example, trying to explain the importance of online privacy to a Sumerian priest in 2500 BCE.

Currently, people generally don’t act as if they are profoundly ignorant about moral facts, or especially interested in discovering and pursuing the good de dicto. People are better described as having a bunch of things they like, including their own power, and they directly want more of those things. We don’t see a reason, on the basis of superintelligent advice alone, for expecting this to change.

2.3.2. Abundance and diminishing returns

A post-AGI world would very likely also be a world of economic and material abundance. Due to technological advancement and the automation of the economy, the people who make decisions over how society goes will be millions or even billions of times richer than we are today.

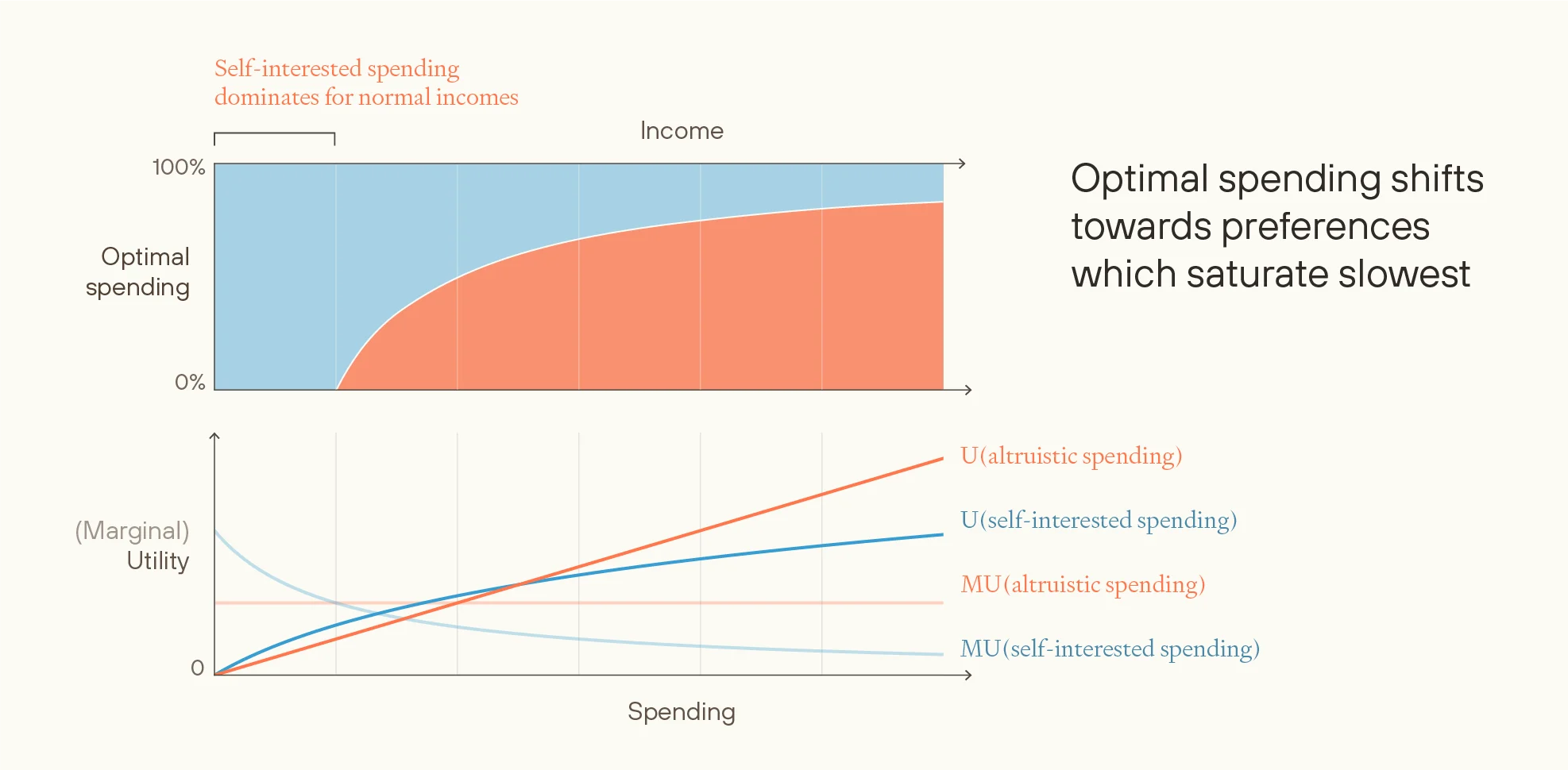

This suggests an argument for optimism about altruistic behaviour. Most people have both self-interested and altruistic preferences. Self-interested preferences typically exhibit rapidly diminishing marginal utility, while altruistic preferences exhibit more slowly diminishing marginal utility (in the limit, not diminishing at all, i.e. linear).18 Initially, when resources are scarce, people primarily satisfy self-interested preferences. But once wealth increases enough, people will shift marginal resources toward altruistic ends, since the marginal utility of additional self-interested consumption becomes smaller than the marginal utility of altruistic spending.

Because of this, if individuals have even a weak preference to promote the good, with extremely large amounts of resources they will want to use almost all their resources to do so.

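The shift toward altruistic spending described above can be illustrated with a toy model. The sketch below assumes, purely for illustration, that self-interested utility is logarithmic, ln(1 + s), and that altruistic utility is linear, w·a, with a small weight w; these functional forms and the name `optimal_split` are our hypothetical choices, not the essay’s. Maximising ln(1 + s) + w·a subject to s + a = wealth means spending on oneself until the marginal utility 1/(1 + s) falls to w, and giving everything beyond that to altruistic ends.

```python
def optimal_split(wealth: float, altruism_weight: float) -> tuple[float, float]:
    """Return (self_spend, altruistic_spend) maximising the hypothetical utility
    ln(1 + s) + w * a subject to s + a = wealth, with w = altruism_weight > 0."""
    if altruism_weight <= 0:
        raise ValueError("altruism_weight must be positive")
    # Marginal utility of self-spending is 1 / (1 + s); of altruistic spending, w.
    # Self-spending stops once 1 / (1 + s) == w, i.e. at s = 1/w - 1.
    threshold = max(1.0 / altruism_weight - 1.0, 0.0)
    # Below the threshold, all wealth goes to self (a corner solution).
    self_spend = min(wealth, threshold)
    return self_spend, wealth - self_spend


for wealth in (1e2, 1e4, 1e6, 1e9):
    self_spend, altruistic = optimal_split(wealth, altruism_weight=0.001)
    print(f"wealth {wealth:>13,.0f}: {altruistic / wealth:.4%} spent altruistically")
```

With w = 0.001, someone with wealth 100 spends nothing altruistically, but at wealth 10⁶ over 99.9% goes to altruistic ends. Note that the same logic favours whichever preference is closest to linear, whether or not it is altruistic.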

We think that this is a good argument for thinking that, if there are deliberate decisions about how the resources of the cosmos are to be used, then the bulk of those resources will be used to fulfill preferences with the lowest rate of diminishing marginal utility in resources (including linear or even strictly convex preferences). However, these preferences may not be altruistic at all, let alone the “right” kind of altruistic preferences.

There’s nothing incoherent about self-interested (or non-altruistic) preferences which are linear in resources. Some might prefer 2,000 years of bliss twice as much as 1,000 years of bliss, or prefer to have 2,000 identical blissful copies of themselves twice as much as having 1,000 copies. Others might have a desire to own more galaxies, just for their own sake, like collector’s items; or to see more and more shrines to their image, while knowing they don’t benefit anyone else. Or people might value positional goods: they might want to have, for example, more galactic-scale art installations than their cosmic rival does.

Currently, there’s very little correlation between wealth and the proportion of spending that goes to charity and altruism; within rich countries the correlation might even be negative. Despite steeply diminishing returns to self-interested spending, even billionaires spend proportionally modest amounts on philanthropy. Total billionaire wealth is around $16 trillion. Assuming a 5% real return, that yields $800B a year in income without drawing down principal, yet billionaire philanthropy amounts to around $50B/yr,19 around 6% of that income.20 That weakly suggests that even vastly increased wealth will not significantly increase the fraction of spending on altruistic ends, especially once more advanced technology provides greater scope to spend money in self-interested ways.
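The back-of-the-envelope figures in this paragraph can be reproduced in a few lines. The sketch simply takes the essay’s inputs ($16 trillion of wealth, a 5% real return, $50B/yr of giving) as given; the helper name `philanthropy_share` is hypothetical.

```python
def philanthropy_share(total_wealth: float, real_return: float,
                       annual_giving: float) -> tuple[float, float]:
    """Return (annual income from wealth, giving as a fraction of that income)."""
    # Income that can be spent each year without drawing down the principal.
    income = total_wealth * real_return
    return income, annual_giving / income


# The essay's inputs: $16T of billionaire wealth, 5% real return, $50B/yr giving.
income, share = philanthropy_share(16e12, 0.05, 50e9)
print(f"income ≈ ${income / 1e9:,.0f}B/yr; giving ≈ {share:.1%} of that income")
```

This reproduces the figures above: about $800B/yr of income, of which giving is roughly 6%.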

Alternatively, people could have ideological preferences whose returns diminish slowly or not at all, and that are not preferences for promoting the good (de dicto). As discussed, they could instead be operating on the assumption of some particular moral view, or according to unreflective preferences. Or, even if people are acting according to their reflective preferences of the good, those reflective preferences could have failed to converge, motivationally, onto the right values.

Overall, the argument that altruistic preferences diminish more slowly in resources does suggest to us that a larger share of resources will be spent altruistically in the future. But we don’t see it as a strong argument that most resources will be spent altruistically, or that such preferences will converge on the same, correct place.

2.3.3. Long views win

Another way in which altruistic values might become dominant in the future is through asymmetric growth: without persuading or coercing other groups, certain values could be associated with faster growth in population or wealth, to the point where most living people embody those values, or most of the world’s resources are effectively controlled by those values.

Asymmetric growth might systematically favour altruistic values. For example, people face decisions about whether to consume now, or to save for the future (including for other people). As long as most people discount future consumption, saving your wealth (by investing it productively) grows your share of total wealth. People with more impartial and altruistic views are likely to save more, because this is a way to eventually help more people, at the expense of immediate self-interest. Altruists might also choose to have more children for similar reasons. So, in the long run, free choices around saving and fertility might select for altruistic values.21 Perhaps people with these altruistic views are also generally more willing to try to figure out and pursue the good de dicto. If so, that would be a reason to be hopeful about widespread, accurate, and motivational convergence.
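The saving-based selection effect described above can be sketched as a simple compounding model. In this hypothetical Python example, two groups earn the same real return r, but one reinvests a larger fraction of it and consumes the rest; the 5% return and the saving fractions of 0.9 and 0.3 are illustrative assumptions, not estimates from the essay, and `wealth_share` is a name introduced here.

```python
def wealth_share(years: int, r: float = 0.05, patient_rate: float = 0.9,
                 impatient_rate: float = 0.3, patient_start: float = 0.5) -> float:
    """Fraction of total wealth held by the high-saving group after `years`,
    under the hypothetical assumption that both groups earn return r and
    reinvest a fixed fraction of it (consuming the remainder)."""
    patient, impatient = patient_start, 1.0 - patient_start
    for _ in range(years):
        # Each group's wealth grows only by the reinvested part of its return.
        patient *= 1.0 + r * patient_rate
        impatient *= 1.0 + r * impatient_rate
    return patient / (patient + impatient)


for years in (0, 50, 100, 200):
    print(f"after {years:3d} years: high savers hold {wealth_share(years):.1%} of wealth")
```

Under these assumptions, a group that starts with half of all wealth holds over 90% of it after a century, and even a group starting with 10% ends up with over 90% after two centuries. The model deliberately ignores fertility, taxation, expropriation, and trade.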

But, as with the last two sections, this isn’t a reason for thinking that motivation to promote the good de dicto will become predominant. Even if non-discounting values win out over time, those non-discounting values could be for many different things: they could be self-interested in a way that doesn’t discount one’s interests in time, or they could be based on a misguided ideology.

What’s more, non-discounting values might not have time to win out. If the major decisions are made soon, and then persist, then there just won’t be time for this selection effect to operate. (It could be that there are much faster ways for non-discounting views to control more of the future than discounting views, through trade. That idea will be discussed in section 3.)

Finally, of course, the kind of moral attitudes favoured by these asymmetric growth and other selection processes might just be wrong, or unconnected to other necessary aspects of pursuing the good de dicto. There are plenty of ways to be morally motivated, extremely patient, and totally misguided. Perhaps it’s right to discount future value!

2.4. An argument against WAM-convergence

In this section, we give an argument against expecting WAM-convergence, based on thinking through the implications of different meta-ethical positions. Stating it briefly, for now: if some form of moral realism is true, then the correct ethical view would probably strike most people as very weird and even alien. If so, then it’s unlikely that people will be motivated to act upon it, even if they learn what the correct moral view is; what’s more, if moral beliefs are intrinsically highly motivating, then people might choose not to learn or believe the correct moral view.

Alternatively, if some form of moral anti-realism is correct, then there is no objectively correct moral view. But if so, then there’s little reason to think that different people will converge on exactly the same moral views. Given our no easy eutopia discussion, that means too few people would converge on precisely the moral views required to avoid losing out on most value, by the lights of their reflective preferences.

This was all stated in terms of realism and antirealism. We’ll continue to use these terms in the longer argument. But if you find the idea of moral realism confused, you could just think in terms of predictions about convergence: whether beings from a wide variety of starting points (e.g. aliens and AIs as well as humans) would, in the right conditions, converge on the same ethical views; or whether such convergence requires a close starting point, too (e.g. such that humans would converge with each other, but aliens and AIs wouldn’t); and whether, in those conditions under which you would expect convergence, you would endorse the views which have been converged upon.

2.4.1. Given moral realism

We’ll start by considering moral realism. Overall, we think that widespread, accurate, motivational convergence is more likely given moral realism. But it’s far from guaranteed. If moral realism is true, then the correct moral view is likely to be much more “distant” from humanity’s current preferences than it would be if antirealism were true, in the sense that there’s much more likely to be a gap between what morality requires and what people would have wanted to do anyway. On realism, people in the future are more likely to have the same (correct) beliefs about what’s right to do, but they are less likely to be motivated to act on those beliefs.

This seems true on both “internalist” and “externalist” forms of realism. “Internalist” views of moral judgement understand belief in a moral claim to be essentially motivating: if you believe that stealing is morally wrong then (all other things being equal) you’ll be more averse to stealing than otherwise. If internalism about moral judgements is correct, then it’s more likely that moral convergence would be motivating. “Externalist” views of moral judgement reject that there’s any necessary connection between moral judgements and practical motivation: you could genuinely come to believe that stealing is morally wrong, but become no less averse to stealing.

Either way, we shouldn’t be confident that people will act on the correct moral beliefs. If internalism is the right view on moral judgements, then people might just prefer not to learn facts that end up motivating them to act against their personal interests. Maybe something like this is going on when we feel reluctant to be lectured about our obligations to donate money to charity. On the other hand, if externalism is the right view of moral judgements, then society could converge on the right moral views, but not necessarily be motivated to act on them. Maybe something like this is going on when we come to believe we have obligations to donate money to charity, but still don’t.

2.4.2. Given antirealism

Next, let’s see what follows if moral realism is false: if there aren’t any true mind-independent moral facts for society to converge on. We’ll focus in particular on subjectivism. (Note, though, that academic philosophers often classify subjectivism as a form of realism, just a “non-robust” sort of realism.) If subjectivism is true, then the gap between what morality requires and what people would have wanted to do anyway becomes much smaller. But it becomes much less likely that different people will converge.

On the simplest kind of subjectivism, an outcome A is morally better than another outcome B just if you prefer for A to happen rather than B.22 On this view, “accurate” moral convergence would just mean that prevailing views in society converge in a widespread and motivating way toward your own current preferences. But different people today, clearly, have very different preferences (including preferences which are indexical to them — i.e. they want themselves not to starve much more than they want people in general not to starve), so future society can’t converge towards all of them. So the simplest kind of subjectivist would have to say that “accurate” moral convergence is very unlikely.23

But there are more sophisticated kinds of subjectivism. For example, a subjectivist could view the better outcomes as those which some kind of idealised version of themselves would prefer. We could then understand “accurate moral convergence” in terms of convergence towards such idealised judgements. So accurate, widespread, motivational convergence might still be on the table.

The question here is whether the idealising processes that different people use all point towards the same (or very similar) views, especially about impartial betterness.

That would be true if there is some kind of objectively correct idealising process.24 But the idea that there’s some objectively correct idealising process seems inconsistent with the basic motivation for subjectivism, namely suspicion of any notion of objective moral correctness. If you don’t endorse some alleged objective idealising process, why should you follow it, rather than the process you in fact prefer?

But if the idealising process is itself subjective — based on how you currently would like to reflect, if you could — then WAM-convergence seems unlikely. This is for two reasons.

First, there are a number of important “free parameters” in ethics, and it’s hard to see why different idealising processes, from different starting points, would converge on the same view on all of them. Suppose that two people converge all the way to both endorsing classical utilitarianism. This is not close to enough convergence for them to agree on what a mostly great future looks like. In order to get that, both people would need to agree on what hedonic wellbeing really is, and how precisely to bring about the most wellbeing with the time and resources available. Both people need to agree, for example, on what sizes and types of brains or other physical structures are most efficient at producing hedonic wellbeing. And, even assuming they agree on (say) a computational theory of mind, they also need to agree on what experiences are actually best.

But there must be a vast range of different kinds of experience which computation could support, including kinds never before experienced, hard to even discover, or impossible to experience while maintaining essential facts about your personal identity. And, given antirealism, there are no shared, obvious, and objective qualities of experiences themselves that indicate how valuable they are.25 So there’s no reason to expect significant convergence among antirealists about which experiences involve the most “efficient” production of “hedonic value”.26 Antirealists can appeal to “shared human preferences” as a reason for moral convergence. But such preferences are extremely underpowered for this task — like two people picking the same needle out of an astronomical haystack.27,28

And, once we move beyond assuming classical utilitarianism, there are many other “free parameters”, too. What all-things-considered theory of welfare is correct, and to what precise specification? What non-welfarist goods, if any, should be pursued? How should different goods be traded off against each other? If there are non-linear functions describing how value accrues (e.g., diminishing returns to certain goods), what is the exact mathematical form of these functions? Each of these questions allows for a vast space of possible answers, and we don’t see why different subjective reflective processes, starting from different sets of intuitions, would land on the same precise answers.
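A crude way to see why many free parameters make convergence hard: if agreement on each parameter were an independent event, the chance of agreeing on all of them would shrink geometrically with their number. The sketch below assumes that independence, which the essay does not claim; the function names and example numbers are hypothetical. Shared human preferences can be read as raising the per-parameter agreement probability above chance.

```python
def full_agreement_probability(per_parameter_agreement: float, num_parameters: int) -> float:
    """Chance that two reflective processes agree on every free parameter.

    Toy assumption (ours, not the essay's): agreement on each parameter is an
    independent event with the same probability.
    """
    if not 0.0 <= per_parameter_agreement <= 1.0:
        raise ValueError("per_parameter_agreement must be a probability")
    return per_parameter_agreement ** num_parameters


def chance_agreement(options_per_parameter: int) -> float:
    """Per-parameter agreement if both processes pick uniformly among options."""
    return 1.0 / options_per_parameter


if __name__ == "__main__":
    # Ten parameters with ten live options each, chosen at random: ~1e-10.
    print(full_agreement_probability(chance_agreement(10), 10))
    # Even 90% agreement per parameter, across fifty parameters: ~0.005.
    print(full_agreement_probability(0.9, 50))
```

The second case is the relevant one for the haystack worry: strong but imperfect pressure towards agreement on each question still leaves full agreement unlikely once the questions are numerous.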

Second, the idealising procedure itself, if subjective, introduces its own set of free parameters. How does an individual or group decide to resolve internal incoherencies in their preferences, if they even choose to prioritise consistency at all? How much weight is given to initial intuitions versus theoretical virtues like simplicity or explanatory power? Which arguments are deemed persuasive during reflection? How far from their initial, pre-reflective preferences are people willing to let the idealisation process take them?29

If moral antirealism is true, then the bare tools of idealisation — like resolving inconsistencies and factual disagreements — are greatly underpowered to guarantee convergence. A subjectivist shouldn’t hold out hope that society converges on a view they themselves would endorse.30

2.5. Blockers

Even if, under reasonably good conditions, there would be widespread, motivational, accurate convergence, we might still not get to those conditions. We’ll call the ways in which society might fail to get there blockers. One clear blocker is the risk of extinction. But there are other potential blockers, too, even if we survive.

First, there is the risk that humanity will not choose its future at all. In this scenario, the trajectory of the long-term future is best explained as being the outcome of evolutionary forces, rather than being the outcome of some sort of deliberative process. Even if nearly everyone is motivated by the same moral view, still society could collectively fail to bring it about.31

Second, people in the future could have the wrong non-moral views, especially if those views have greater memetic power (in some circumstances) than the correct views.32 Some ideas might even be so memetically potent that merely considering the idea makes an individual highly likely to adopt it; such ideas might also discourage further change, and become impossible to get out of, like epistemic black holes.

Third, there could be early lock-in: when the most-important decisions are being made, the decision-makers at the time are unable to bring about a mostly-great future, even if they tried. We’ll discuss lock-in more in the next essay.33

Of course, there could also be additional and as-yet unknown blockers.

3. What if some people aim at the good?

In this section, we’ll consider the possibility that (i) under reasonably good conditions, some meaningful fraction of people (weighted by the power they have) would converge on the correct moral view and would be motivated to use most of the resources they control towards promoting the good (de dicto), and (ii) those people will be able to bargain or trade with each other, such that we’ll get to a mostly-great future. By “some meaningful fraction” we mean a minority of all people, but not less than (say) one in a million people.34 So understood, we’ll call idea (i) partial AM-convergence.

In this section, we’ll discuss whether bargaining and trade will be enough to reach a mostly-great future, given partial AM-convergence.

In section 3.1, we’ll illustrate how such “moral trade” could work, and discuss the conditions under which such trade could occur. In section 3.2, we discuss the conditions under which trade could enable a near-best future, and in section 3.3 we discuss the problem of threats. In section 3.4, we put these considerations together, distinguishing cases by whether the correct moral view is unbounded or bounded, whether bads weigh heavily against goods, and whether bads and goods are aggregated separately or jointly. In section 3.5, we discuss blockers to a mostly great future via AM-convergence and trade.

3.1. Trade and compromise

Imagine that a multi-millionaire has a niche but intense wish to own every known Roman coin with a portrait of Julius Caesar. He might not be able to own every coin: some are kept in museums, some private owners are unwilling to sell for sentimental reasons. But he might manage to acquire most Julius Caesar coins in the world, despite controlling a small fraction of the world’s wealth. When he paid each collector for their coin, they preferred his money to their coin, and the multi-millionaire preferred the coin, so each party was glad to make the trade.

Someone could trade for moral reasons, too. You could find some voluntary exchange where you (or both parties) are motivated by making the world a better place. For the price of a caged bird, for example, you can pay to set a bird free. For the price of a plot of Amazonian land, you can save that land from being deforested. But even better bargains are on offer when you have moral reasons to care far more about some outcome than your counterparty. You could (in theory) pay a retailer to discount alternatives to animal products, for example. Or both parties could trade for moral reasons: Annie cares a lot about recycling and Bob cares a lot about littering, so Bob might agree to start recycling if Annie stops littering, and both parties agree the world is now a better place. This is the promise of moral trade.35
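The Annie and Bob example can be written out as a small payoff calculation. The numbers are hypothetical: each party’s moral view values the other’s change of behaviour highly and their own change only a little, and changing one’s own behaviour carries a personal cost. The `Party` type and `outcome_gains` function are illustrative names of ours.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Party:
    """Hypothetical weights: how much a party's moral view values each
    change, plus the personal cost of changing their own behaviour."""
    name: str
    values_bob_recycling: float
    values_annie_not_littering: float
    cost_of_own_change: float


def outcome_gains(annie: Party, bob: Party,
                  annie_stops_littering: bool, bob_recycles: bool) -> tuple[float, float]:
    """Each party's net gain relative to the status quo (no changes): the
    moral value of the outcome by their own lights, minus their personal
    cost if the outcome requires them to change."""
    def moral_value(p: Party) -> float:
        return (p.values_bob_recycling * bob_recycles
                + p.values_annie_not_littering * annie_stops_littering)

    annie_gain = moral_value(annie) - (annie.cost_of_own_change if annie_stops_littering else 0.0)
    bob_gain = moral_value(bob) - (bob.cost_of_own_change if bob_recycles else 0.0)
    return annie_gain, bob_gain


annie = Party("Annie", values_bob_recycling=10.0, values_annie_not_littering=1.0, cost_of_own_change=3.0)
bob = Party("Bob", values_bob_recycling=1.0, values_annie_not_littering=10.0, cost_of_own_change=3.0)

if __name__ == "__main__":
    for annie_stops, bob_recycles in [(False, False), (True, False), (False, True), (True, True)]:
        print(annie_stops, bob_recycles, outcome_gains(annie, bob, annie_stops, bob_recycles))
```

Under these assumptions, a unilateral change leaves the person who changes worse off (a net gain of -2), so neither acts alone; the exchange gives each party a net gain of 8 by their own lights.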

In the future, there could be potential for enormous gains from trade and compromise between groups with different moral views. Suppose, for example, that most in society have fairly common-sense ethical views, such that common-sense utopia (from the last essay) achieves most possible value, whereas a smaller group endorses total utilitarianism. If so, then an arrangement where the first group turns the Milky Way into a common-sense utopia, and the second group occupies all the other accessible galaxies and turns them into a total utilitarian utopia, would give both groups a future that is very close to as good as it could possibly be, by their own lights. Potentially, society could reach this arrangement via some sort of trade, even if one group were a much smaller minority than the other.

Some reasons for trade might become less relevant in a more technologically advanced society. For example, with superintelligent advice, investors might less often have differing expectations about the future, which would be a reason for trading volume to decline (all else equal).36

But other reasons for trade would likely remain equally or more relevant, even in a technologically mature society. Different groups could continue to:

- Value different natural resources

  - For example, some groups might want star systems that contain rocky planets in the habitable zone to live on; other groups might want to preserve particularly beautiful areas of the cosmos; etc.37

- Value different locations of resources

  - For example, some groups might intrinsically value resources in our own solar system more than those in distant galaxies; other groups might be indifferent.

- Have different rates of pure time preference

  - Some groups might value being able to acquire tradeable goods soon (such as to benefit currently-living people), while others might be indifferent about when they acquire or use them.

- Have different attitudes to risk, when some risk is ineliminable

  - For example, even for an extremely technologically advanced society, it might be impossible to know for sure whether a distant galaxy will have been settled by aliens or not.

In many cases, two views can be “resource-compatible”: there is some way to almost fully satisfy both views with the same resources, even if the best use of those resources on one view is mostly worthless on the other. When two such views control comparable shares of resources, the gains from trade between them could be large from each view’s perspective. For example, hedonists might care only about bliss, and objective list theorists might care primarily about wisdom; they could potentially agree to create a shared society where beings are both very blissful and very wise. Abstractly speaking: the same resources can sometimes achieve much more value on two different views if they are all devoted to some “hybrid good” than if the resources were simply split evenly between the two views without the possibility of bargaining or trade.
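The contrast between splitting resources and funding a hybrid good can be made concrete with a toy model. It assumes, hypothetically, that both views are linear in resources, that each view’s favourite use of resources is worthless to the other, and that a single `compatibility` number captures the fraction of each view’s maximum value that the hybrid good delivers. The function names are ours.

```python
def hybrid_gains(share_a: float, compatibility: float) -> tuple[float, float]:
    """Gain for each view from devoting all resources to a hybrid good rather
    than spending its own share on its favourite good.

    Toy assumptions (hypothetical): both views are linear in resources; value
    is normalised so that spending all resources on a view's favourite good is
    worth 1 to that view; each view's favourite good is worth 0 to the other;
    the hybrid good is worth `compatibility` to both views.
    """
    value_a_split = share_a          # A spends its share on its own favourite
    value_b_split = 1.0 - share_a    # B does the same with the remainder
    value_hybrid = compatibility     # both views get this from the hybrid
    return value_hybrid - value_a_split, value_hybrid - value_b_split


def hybrid_is_mutually_beneficial(share_a: float, compatibility: float) -> bool:
    """True if both views strictly prefer the hybrid to splitting."""
    gain_a, gain_b = hybrid_gains(share_a, compatibility)
    return gain_a > 0 and gain_b > 0


if __name__ == "__main__":
    print(hybrid_gains(0.5, 0.9))                   # both gain about 0.4
    print(hybrid_is_mutually_beneficial(0.5, 0.1))  # highly particular views
    print(hybrid_is_mutually_beneficial(0.95, 0.9)) # very unequal shares
```

On this toy model, the hybrid benefits both sides only when `compatibility` exceeds the larger party’s share of resources. That is one way to read the worry about linear views below: if each view’s best use of resources is highly particular, compatibility is low, and keeping resources separate wins.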

What’s more, some of the reasons why we don’t get trade today would no longer apply. As discussed in the next essay, superintelligence could enable iron-clad contracts, which could solve the problem of a lack of mutual trust. And transaction costs would generally be extremely small relative to the gains, and more potential trades would be salient in the first place, given an enormous number of superintelligent delegates able to spend abundant time figuring out positive-sum arrangements.

3.2. Would trade enable a mostly-great future?

In many cases, it looks like there will be the potential for truly enormous gains from moral trade. This is a significant cause for optimism. For example, even if most people care little about the welfare of digital beings, if it’s sufficiently low-cost for them to improve that welfare, the minority who do care about digital welfare would be able to bargain with them and improve the lot of digital beings considerably.

More generally, we can consider some key possibilities relating to moral trade:

- (1) The extent to which the hypothetical gains from frictionless trade are actually realised.

- (2) The (moral) gains from trade from all trades that in fact take place.38

- (3) The (moral) gains from trade for the correct view.39

- (4) The value of the world, on the correct moral view, after trade.40

What ultimately matters is (4), the value of the world on the correct view after trade. But if you are uncertain over moral views, then you should also be interested in (2): the extent to which most views gain from trade. Of course, (2)–(4) depend on (1), whether possible gains from trade are actually realised. That could depend on, for example, whether the right institutions exist to support trade, and the extent to which different views actually take trades which improve the world by their lights, which isn’t guaranteed.41

However, mutual gains from trade seem especially unlikely to us if the prevailing views are non-discounting and linear-in-resources. Such gains are possible in principle, because of the potential for hybrid goods. But if the ways to achieve maximum value per unit cost on each view are both highly particular, then it’s unlikely any compromise could achieve much more value (by the lights of each view) than if each view kept its resources for itself.

In fact, there may even be a “narcissism of small differences” effect: if two views agree on the importance of the very same domains or aspects of the world, but disagree over what to do with them, then the gains from compromise could be smaller, despite the views seeming superficially similar. Suppose Annie and Bob agree on the importance of spiritual worship, but disagree over which deity to worship. By contrast, Claudia mostly cares about the environment. Although Annie and Bob’s views are superficially similar, the mutual gains from moral trade between Claudia and either Annie or Bob are likely larger than those between Annie and Bob, because Annie and Bob both agree there is no “hybrid” activity of worshipping two deities at the same time.

Given this, it becomes crucial to know: assuming that the right moral view is non-discounting and linear-in-resources, how much control over resources is such a view likely to have, before trade?

The case for thinking that the correct view would begin with a significant share of the resources controlled by non-discounting, linear views is that, currently, such cosmic-scale values are very unusual, and are unusually likely to be held by the altruistically minded. Of course, altruistically minded people disagree widely today, so we’d also have to hope for significant AM-convergence among those people after some period of reflection. The counterargument is that, in a technologically advanced society, many more types of people will have views that are non-discounting and linear, for two reasons. First, superintelligence-aided reflection might plausibly make people much more likely to adopt views that are non-discounting and linear. Second, as we saw in section 2.3.2, enormous wealth might cause people to shift focus towards their preferences which diminish most slowly with resources, even if those preferences are self-interested.

Putting this together, it’s hard to know what fraction of resources the correct view will control among all other non-discounting and linear views, assuming that the correct moral view has those properties. Here’s a rough guess: if (at the relevant period of bargaining) 1 in people out of everyone have altruistic preferences, then the fraction of those people among everyone with approximately linear preferences of any kind, is something like 10 in .

3.3. The problem of threats

If trade and bargaining are reasons for optimism, then threats could undermine that optimism. Suppose that Alice and Bob want different things, and bargain with each other. Alice could extort Bob by credibly committing to make the world worse on Bob’s view, unless Bob makes concessions to Alice. In the previous examples, both parties to a trade can agree to the trade itself, and also agree the world is made no worse-off by whatever commitment or enforcement mechanisms enabled the trade42 in the first place. Threats are different. When one party makes a threat, both may agree on its terms, but at least one party might view the world as made worse-off by the threat having been made, regardless of whether the threatened party capitulates. Imagine Bob cares a lot about animals being mistreated, and Alice doesn’t. Then Alice can threaten to mistreat lots of animals to extort resources from Bob for uses he doesn’t value at all. Then, whatever Bob chooses, he’ll likely view the world as worse than it would have been if Alice had never been in the picture.43

The extent of public writing on threats is very limited;44 sometimes just learning about the topic can make it more likely for threats to occur, so people are naturally reluctant to spread their research widely. We ourselves have not dug deeply into this issue, despite its importance.

But, significantly, even small risks of executed threats can easily eat into the expected value of worlds where many groups with different values are able to bargain with each other. On many views, bads weigh more heavily than goods: this is true on negative-leaning views, or on views on which value is meaningfully bounded above but not meaningfully bounded below, and goods and bads should be aggregated separately; or simply on empirical views where it turns out some bads are much cheaper to create with the same resources than any compensating goods. If so, then even if only a small fraction of resources are devoted to executed threats, most of the value of the future could be lost;45 if a large fraction of resources are devoted to executed threats, then most value could be lost even if the correct view is not negative-leaning.46 What’s more, those who hold the correct moral view may be less likely to themselves threaten other groups, so even if no threats are ever executed, those people could still lose most of their resources via extortion. Finally, it’s not obvious to us that some kind of legal system which reliably prevents value-undermining threats would be mutually agreeable and stable, so the worry does not only apply to legal ‘anarchy’ between views.47
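The arithmetic behind this worry can be sketched for the case where goods and bads are aggregated jointly. This toy model is our own assumption: value is linear in resources, and a single hypothetical `bad_intensity` parameter stands in both for negative-leaning weightings and for bads being cheaper to produce than compensating goods.

```python
def net_value_fraction(threat_share: float, bad_intensity: float) -> float:
    """Value of the future relative to the best case (all resources on goods).

    Toy assumptions (hypothetical): goods and bads are aggregated jointly and
    value is linear in resources.
    threat_share: fraction of resources spent on executed threats.
    bad_intensity: units of disvalue bought per unit of resources spent on
        threats, relative to the value bought per unit spent on goods.
    """
    goods = 1.0 - threat_share
    bads = threat_share * bad_intensity
    return goods - bads


if __name__ == "__main__":
    for share, intensity in [(0.01, 1.0), (0.01, 50.0), (0.01, 100.0), (0.3, 1.0)]:
        print(share, intensity, round(net_value_fraction(share, intensity), 3))
```

With 1% of resources spent on executed threats, the future keeps about 98% of its value when bads and goods are equally intense, about 49% when bads are 50 times as intense, and becomes net-negative at 100 times. With 30% spent on threats, 60% of the value is lost even at equal intensity.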

3.4. Putting it all together

How much more optimistic should the idea of trade and compromise make us? We can give an overall argument by looking at different types of moral view, using some of the distinctions we covered in the last essay, No Easy Eutopia, section 3.

If executed moral threats amount to a small but meaningful fraction of future resource use, then if the correct view:

- Is bounded above, goods and bads are aggregated jointly, and bads don’t weigh very heavily against goods, then a mostly-great future seems likely.

- Is bounded above, goods and bads are aggregated jointly, and bads do weigh heavily against goods, then a mostly-great future seems unlikely, because the executed threats eat into the value of the goods.

- Is bounded above, and goods and bads are aggregated separately, then a mostly-great future seems unlikely.

  - If the view is bounded below (with a lower bound of compensating or greater magnitude), then future society is likely to reach both the upper and lower bounds, and we will end up with a future that’s at most of net zero value.

  - If the view is unbounded below, or the magnitude of the lower bound is much greater than that of the upper bound, then the bads will outweigh the goods, and we’ll probably end up with a net-negative future.

- Is unbounded above, and bads don’t weigh heavily against goods, then whether we get a mostly-great future is uncertain.

  - If the correct view is unusually resource-compatible with other prevailing views, then a mostly-great future is plausible. But resource-compatibility between linear views seems unlikely, and if so then a mostly-great future seems unlikely, too.

- Is unbounded above, and bads do weigh heavily against goods, then a mostly-great future is unlikely, because executed threats eat into the value of the goods.

If value-destroying threats can be prevented, then things seem more optimistic. In this scenario, it’s only on the linear views that we fail to reach a mostly-great future via compromise and trade.

We think it’s appropriate to be highly uncertain about which axiological view is correct. Given that, it’s worth considering what the value of the future looks like, from our uncertain vantage point. Two things stand out. First, most views we’ve considered are sensitive to value-destroying executed threats, which suggests we should try hard to prevent such threats, even if doing so is itself costly. Second, some views are highly resource-compatible with others; in cosmic terms it could be very inexpensive to achieve a near-best future for many of those views by giving even just a small fraction of all resources to views that are easily-satiable.48 We should aim to do so.

3.5. Blockers to trade

The scenarios where we get to a mostly-great future via trade and compromise face the same major blockers that we discussed in section 2.5, and two other blockers, too. First, concentration of power. If only a small number of people have power, then it becomes less likely that the correct moral views are represented among that small group, and therefore less likely that we get to a mostly-great future via trade and compromise.

Second, even if power is not concentrated, the most valuable futures could be sealed off. We already saw one way this could be so: if people make and execute value-destroying threats. But there are a lot of other ways, too. For example, if decisions are made by majority rule, then the majority could ban activities or goods which they don’t especially value, but which minority views value highly.49

Alternatively, collective decision-making procedures can vary in the extent to which they elicit decisions made on the basis of good reasons versus other things. Perhaps decisions are made democratically, but such that the incentives favour voting in order to signal allegiance to a social group. And even if some collective decision-making process is designed to give everyone what they want, that can be very different from a decision-making process that is the best at aggregating everyone’s best guesses at what the right decision is; these two broad approaches can result in very different outcomes.50

Even the same decision-making procedure can give very different results depending on when the decision occurs. If, for example, Nash bargaining were to happen now, then people with quasilinear utility might get most of what they want just by getting the goods that have heavily diminishing utility for them. But, once such people are already very rich, their preferences for additional goods would be linear, and couldn’t be so easily satisfied.51 And, of course, the outcome of any bargaining process depends sensitively on the power distribution among the different bargainers, and on what would happen if no agreement occurs; this is something that can vary over time.
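Nash bargaining picks the agreement that maximises the product of each party’s gain over what they would get with no deal. The grid-search sketch below splits one unit of a divisible resource between two parties. The utility functions `linear` and `satiable` are hypothetical stand-ins for a party with linear preferences and a party whose preferences are fully satisfied by a small amount; the disagreement payoffs `d_a` and `d_b` are illustrative.

```python
from typing import Callable, Optional


def linear(share: float) -> float:
    """Hypothetical utility that never satiates."""
    return share


def satiable(share: float) -> float:
    """Hypothetical utility fully satisfied by 10% of the resource."""
    return min(share, 0.1) / 0.1


def nash_bargaining_split(
    u_a: Callable[[float], float],
    u_b: Callable[[float], float],
    d_a: float = 0.0,
    d_b: float = 0.0,
    steps: int = 10_000,
) -> Optional[float]:
    """Return party A's share x in [0, 1] maximising the Nash product
    (u_a(x) - d_a) * (u_b(1 - x) - d_b), searched on a uniform grid.

    Only splits that leave both parties at least as well off as their
    disagreement payoffs (d_a, d_b) are considered; returns None if none do.
    """
    best_x: Optional[float] = None
    best_product = -1.0
    for i in range(steps + 1):
        x = i / steps
        surplus_a = u_a(x) - d_a
        surplus_b = u_b(1.0 - x) - d_b
        if surplus_a < 0 or surplus_b < 0:
            continue  # someone would rather have no deal
        product = surplus_a * surplus_b
        if product > best_product:
            best_x, best_product = x, product
    return best_x


if __name__ == "__main__":
    print(nash_bargaining_split(linear, linear))
    print(nash_bargaining_split(satiable, linear))
    print(nash_bargaining_split(linear, linear, d_a=0.3))
```

Under these assumptions, two linear parties split the resource evenly; a satiable party paired with a linear one settles for about 10% (everything it wants); and raising party A’s no-deal payoff to 0.3 moves its share to about 65%. Grid search is used for transparency rather than efficiency.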

The more general point is that the outcomes we get can vary greatly depending on which collective decision-making processes are used. Some such processes might well be predictably much better than others.

4. What if no one aims at the good?

In sections 2 and 3, we looked at scenarios where at least some people were deliberately optimising towards the good (de dicto). But let’s now discuss whether this is really needed. Perhaps we can hit a narrow target even if no one is aiming at that target.

The key argument against such a position is that it would seem like an extraordinary coincidence if society hit the narrow target without explicitly trying to do so. So there needs to be some explanation of why this isn’t a coincidence.

There are possible explanations. In terms of our analogy of sailing to an island: perhaps none of the shipmates intrinsically care about reaching the right island, but do care about their own salary, and get paid only if they do their job. If all the shipmates just do their job well enough, then the ship reaches the island: the shipmates’ desire for their salary steers the ship in the right direction. Alternatively, consider again that flight evolved in animals, more than once, even though no agent tried to optimise for that target; flight evolved because it was useful for maximising reproductive fitness.

4.1. If most aim at their self-interest

First, let’s consider cases where (i) nobody is aiming at the good (de dicto), and (ii) most people are pursuing their own local interests, but (iii) absent blockers, most people converge on the right view of what’s best for them. Couldn’t that still be enough to reach a mostly great future?

If there’s some strong correlation between what’s good for every individual and what’s good overall, then it isn’t a coincidence that people pursuing what’s best for them results in what’s near-best overall.

Consider an analogy with material wealth. Historically, most people have been motivated to pursue material wealth for themselves and their circle of concern (like their loved ones or family). Very few people across history seem to have been motivated to pursue material wealth for the entire world, or across the entire future, or even for their own countries. And yet, humanity at large is vastly more materially wealthy than it was even a few centuries ago. “It is not from the benevolence of the butcher, the brewer, or the baker, that we expect our dinner,” wrote Adam Smith, “but from their regard to their own interest.”

How did this happen? One happy fact is that two parties can both become richer, in the long run, by engaging in forms of collaboration and coordination. This is true at many levels of organisation — from small groups, through to national systems of law and commerce, through to international trade and coordination. Perhaps, if most people converge on wanting what’s ultimately good for them, the same trend could enable people to increasingly improve their own lives, and that could be enough to make the future mostly-great.

To be sure, given cognitive and material abundance, people will probably, in general, get much more of what they self-interestedly want than they do today. But the crucial question is whether people pursuing what’s best for themselves is a strong enough driver towards what’s best overall. If it isn’t, then, again, a world driven by self-interest could become staggeringly better than the world today, but still reach only a fraction of how good it could be, for want of a few crucial components.

On some moral and meta-ethical views, the overlap between the aggregate of individual self-interest and overall value might be strong enough. Consider a view on which (i) totalising welfarism is the correct axiological view; and (ii) “meta-ethical hedonism” is correct, in the sense that goodness is a property of conscious experiences that we have direct access to, and coming into contact with that property makes it more likely that the experiencer will believe that that experience is good.52 On such a view, it becomes more plausible that everyone pursuing their own self-interest will converge on enjoyment of the same kind of highly valuable experiences, and that such a world is near-best overall. If so, everyone pursuing their own self-interest could well lead to a very similar outcome to the one that would result if they were all instead motivated to promote the moral good.53

We think these views have some merit, but we don’t think you should be at all confident in either of them. And, even if we accept them, people pursuing their own self-interest is only sufficient to create a mostly-great future if those same people can have the most valuable experiences. But plausibly they can’t: the very best experiences might be so alien that most people initially pursuing their self-interest cannot themselves experience them, similar to how a bat can’t comprehend what it is like to be a human without ceasing to be a bat. If so, most people can only bring about the best experiences for some other kind of person, not for themselves. So most people would not pursue them out of self-interest alone.54

And if we drop either of these views, then the correlation between self-interest and the overall good is just not strong enough. People could be motivated to improve their own lives, but not to create enough additional good lives, if doing so doesn’t also benefit them. They could even be motivated to create low-value or bad lives (e.g. digital servants) if doing so does benefit them. Or some aspects of the future’s value might not be good for anyone, like the possible intrinsic value of nature or beauty. Or some things could even be locally good, but bad overall: for example, on some egalitarian views, making already well-off people even better off can be bad because it increases inequality.

People could also fail to pursue their own good. If there is a single correct conception of the prudential (self-interested) good, then many of the arguments for no easy eutopia would suggest that the target for prudential good is also narrow, non-obvious, and perhaps not motivating even after reflection. Suppose, for example, that hedonism about wellbeing is correct; nonetheless, people might just prefer not to turn themselves into machines for generating positive experiences, perhaps reasonably.

With these points in mind, we can see that the analogy between individual and total good, on the one hand, and individual and global wealth, on the other, could be very misleading. Wealth is held and generated by people, but there could be forms of value which are not good for anyone, like the value of nature. The instrumental value of money is obvious, but what is ultimately good for somebody might not be. Moreover, it’s not even clear that self-interest would succeed in generating nearly as much wealth, in the long run, as some termite-like motivation to generate global wealth per se.

4.2. If other motivations point towards good outcomes

One way in which it wouldn’t be necessary for most people in society to aim at the good de dicto is if intrinsically valuable goods are also instrumentally valuable on a wide range of other moral views. For example, perhaps some of the following goods are intrinsically valuable: the acquisition of knowledge; the proliferation of life; the proliferation of complex systems; feats of engineering; preference-satisfaction.

Whatever goals people have in the future, their plans are likely to involve at least some of acquiring knowledge, proliferating life and complex systems, achieving feats of engineering, and satisfying their own preferences and the preferences of those beings they create. So if at least some of these things are good in and of themselves, then the future will be better, even if future people are creating those goods only as a means to some other end.

However, the question is whether this is sufficient to get us to a mostly-great future. And, again, that looks unlikely. People who only care about some goods for instrumental reasons are likely to produce less of them compared to people who intrinsically care about accumulating those goods. As an analogy, if Alice intrinsically cares about owning as many books as possible, and Bob values books because he finds it useful to be well-read, then Alice is likely to end up owning far more books (including books she will never read) than Bob ever will. The situation is worse still if the correct moral view intrinsically values some non-instrumentally valuable good even more than it values the instrumentally valuable goods, or if the correct moral view intrinsically disvalues some instrumentally valuable goods.

The intrinsic value of some instrumental goods might be a reason for thinking that the future is very good in absolute terms; but, given no easy eutopia, the correlation between instrumental and intrinsic value just doesn’t seem strong enough to get us to a mostly-great future.

5. Which scenarios are highest-stakes?

In response to the arguments we’ve given in this essay, and especially the reasons for pessimism about convergence we canvassed in section 2, you might wonder whether the practical upshot is that you should seek power for yourself. If a mostly-great future is a narrow target, and you don’t expect other people to AM-converge, then you lose out on most possible value unless the future ends up aligned with almost exactly your values. And, so the thought goes, the only way to ensure that happens is to increase your own power by as much as possible.

However, we don’t think that this is the main upshot. Consider these three scenarios:

- Even given good conditions, there’s almost no AM-convergence between any sorts of beings with different preferences.

- Given good conditions, humans generally AM-converge with each other; aliens and AIs generally don’t AM-converge with humans.

- Given good conditions, there’s broad convergence, where at least a reasonably high fraction of humans and aliens and AIs would AM-converge with each other.

(There are also variants of (2), where “humans” could be replaced with “people sufficiently similar to me”, “co-nationals”, “followers of the same religion”, “followers of the same moral worldview” and so on.)

Though (2) is a commonly held position, we think our discussion has made it less plausible. If a mostly-great future is a very narrow target, then shared human preferences are underpowered for the task of ensuring that the idealising processes of different humans end up in the same place. What would be needed is for there to be something about the world itself that would pull different beings towards the same (correct) moral views: for example, if the arguments are much stronger for the correct moral view than for other moral views, or if the value of experiences is present in the nature of experiences, such that by having a good experience one is thereby inclined to believe that that experience is good.55

So we think that the more likely scenarios are (1) and (3). If we were in scenario (1) for sure, then we would have an argument for personal power-seeking (although there are plausibly other arguments against power-seeking strategies; this is discussed in section 4.2 of the essay, What to do to Promote Better Futures). But we think that we should act much more on the assumption that we live in scenario (3), for two reasons.

First, the best actions are higher-impact in scenario (3) than in scenario (1). Suppose that you’re in scenario (1), that you currently have one billionth of all global power,56 and that the future is on track to achieve one hundred-millionth as much value as if you had all the power.57 Perhaps, via successful power-seeking over the course of your life, you could increase your current level of power a hundredfold. If so, then you would ensure that the future has one millionth as much value as if you had all the power. You’ve increased the value of the future by roughly one part in a million.
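The figures in this example can be checked with a few lines of arithmetic. The sketch below is a toy model of our own, not the authors’ calculation: it reads “one hundred millionth” as 10^-8 and assumes, as the figures seem to require, that the future’s value (as a fraction of its value if you held all the power) scales in proportion to your share of power.

```python
# Toy check of the scenario (1) power-seeking arithmetic.
# Assumption (ours, not the essay's): the future's value, measured as a fraction
# of the value it would have if you held all the power, scales linearly with
# your share of global power.

current_power_share = 1e-9      # one billionth of all global power
current_value_fraction = 1e-8   # on track for one hundred-millionth of the value
power_multiplier = 100          # lifetime power-seeking multiplies your power a hundredfold

new_power_share = current_power_share * power_multiplier
new_value_fraction = current_value_fraction * power_multiplier  # linear-scaling assumption
gain = new_value_fraction - current_value_fraction

print(f"power share after power-seeking:    {new_power_share:.1e}")
print(f"value fraction after power-seeking: {new_value_fraction:.1e}")
print(f"gain in value:                      {gain:.1e}")
```

The gain of about 9.9 × 10^-7 is what the example rounds to one part in a million.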

But now suppose that we’re in scenario (3). If so, you should be much more optimistic about the value of the future. Suppose you think, conditional on scenario (3), that the chance of Surviving is 80%, and that Flourishing is 10%. By devoting your life to the issue, can you increase the chance of Surviving by more than one part in a hundred thousand, or improve Flourishing by more than one part in a million? It seems to us that you can, and, if so, then the best actions (which are non-power-seeking) have more impact in scenario (3) than power-seeking does in scenario (1). More generally, the future has a lot more value in scenario (3) than in scenario (1), and one can often make a meaningful proportional difference to future value. So, unless you’re able to enormously multiply your personal power, you’ll be able to take higher-impact actions in scenario (3) than in scenario (1).
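A similar toy check shows why these thresholds are the relevant ones. The sketch rests on simplifications of our own rather than anything stated here: that expected value is the product of Surviving and Flourishing, that “one part in a hundred thousand” and “one part in a million” are absolute increments, and that the scenario (1) and scenario (3) value scales can be compared directly, as the paragraph’s comparison implicitly does.

```python
# Toy check of the scenario (3) comparison.
# Assumptions (ours, not the essay's): expected value is the product of
# Surviving and Flourishing, and the stated increments are absolute changes
# to those quantities.

SURVIVING = 0.8    # chance of Surviving, conditional on scenario (3)
FLOURISHING = 0.1  # Flourishing, conditional on scenario (3)


def expected_value(surviving: float, flourishing: float) -> float:
    """Expected value of the future as a fraction of the best feasible future."""
    return surviving * flourishing


baseline = expected_value(SURVIVING, FLOURISHING)
gain_via_surviving = expected_value(SURVIVING + 1e-5, FLOURISHING) - baseline
gain_via_flourishing = expected_value(SURVIVING, FLOURISHING + 1e-6) - baseline

# Scenario (1) gain from a hundredfold power increase, using the essay's figures:
# value rises from one hundred-millionth to one millionth.
power_seeking_gain = 1e-6 - 1e-8

print(f"baseline expected value:         {baseline:.2f}")
print(f"gain via +1e-5 Surviving:        {gain_via_surviving:.1e}")
print(f"gain via +1e-6 Flourishing:      {gain_via_flourishing:.1e}")
print(f"scenario (1) power-seeking gain: {power_seeking_gain:.1e}")
```

On these assumptions, each route yields a gain of roughly one part in a million (about 1.0 × 10^-6 via Surviving and 8.0 × 10^-7 via Flourishing), the same order of magnitude as the roughly 9.9 × 10^-7 gain from a hundredfold increase in power in scenario (1).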

A second, and much more debatable, reason for focusing more on scenario (3) is that you might just care about what happens in scenario (3) more than in scenario (1). Will’s preferences, at least, are such that things are much lower-stakes in general in scenario (1) than in scenario (3): he thinks he’s much more likely to have strong cosmic-scale reflective preferences in scenario (3), and much more likely to have reflective preferences that are scope-insensitive and closer to contemporary common sense in scenario (1).

6. Conclusion

This essay has covered a lot of different considerations, and it’s hard to hold them all in mind at once. Remember, we started the essay on the assumption that mostly-great futures are a very narrow target. On the face of it, that suggests pessimism about whether the future will be mostly-great. Overall, we think the considerations in this essay warrant significantly more optimism than that initial impression.

Still, we don’t think the points in this essay establish that a mostly-great future is likely, even in the absence of blockers. Given moral antirealism, the common core of “human values” doesn’t seem specific or well-powered enough to make WAM-convergence look likely. And even if there is some attractive force towards the best moral views, as moral realism would suggest, it still might not be sufficiently motivating. And even if there would be WAM-convergence under good enough conditions, we might not reach those conditions. So widespread, accurate, and motivational convergence looks unlikely.

Scenarios involving partial AM-convergence, plus trade or compromise, seem more realistic and promising to us. But there are major obstacles along that path too, including concentration of power and poor collective decision-making processes. Moreover, value-destroying threats could rob these scenarios of most of their value, or even render them worse than extinction.

Backing up even further, on the basis of all this discussion, we think that the hypothesis from the first essay, that we are far from the ceiling on Flourishing, is correct. Among the problems we might consider, those that hold the future back from flourishing therefore seem greater in scale than risks to our survival. But is there anything we can do about them? In the next two essays, we turn to that question.

Bibliography

Guive Assadi, ‘Will Humanity Choose its Future?’.

Tobias Baumann, ‘Using surrogate goals to deflect threats’, Center on Long-Term Risk, 20 February 2018.

Maarten Boudry, ‘On epistemic black holes: How self‐sealing belief systems develop and evolve’, 2023.

Richard Boyd, ‘How to Be a Moral Realist’, Essays on Moral Realism, 1988.

David Owen Brink, ‘Moral Realism and the Foundations of Ethics’, 1989.

Joe Carlsmith, ‘On the limits of idealized values’, 2021.

Paul Christiano, ‘Why might the future be good?’, Rational Altruist, 27 February 2013.

Jesse Clifton, ‘Preface to CLR’s Research Agenda on Cooperation, Conflict, and TAI’, 13 December 2019.

Francis Fukuyama, ‘The End of History and the Last Man’, 17 September 2020.

Robin Hanson and Kevin Simler, ‘The Elephant in the Brain: Hidden Motives in Everyday Life’, 2017.

J. Michael Harrison and David M. Kreps, ‘Speculative Investor Behavior in a Stock Market with Heterogeneous Expectations’, The Quarterly Journal of Economics, 1 May 1978.

Douglas Hofstadter, ‘Heisenberg’s Uncertainty Principle and the Many Worlds Interpretation of Quantum Mechanics’.

Joshua Conrad Jackson and Danila Medvedev, ‘Worldwide divergence of values’, Nature Communications, 9 April 2024.

William MacAskill, ‘What We Owe the Future: A Million-Year View’, 2022.

Toby Ord, ‘Moral Trade’, Ethics, 2015.

Peter Railton, ‘Facts, Values, and Norms: Essays toward a Morality of Consequence’, 2003.

Michael Smith, David Lewis, and Mark Johnston, ‘Dispositional Theories of Value’, Aristotelian Society Supplementary Volume, 1 July 1989.